What is Portkey?

Portkey is an LLM Gateway that connects your applications to 200+ AI models through a single API. It monitors costs and performance metrics, manages API keys securely, and implements reliability features like fallbacks and load balancing. This helps AI developers and teams build stable, cost-efficient applications without worrying about provider-specific integration challenges.
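To make the reliability features concrete, here is a minimal sketch of what fallbacks and weighted load balancing mean in general. It is not Portkey's implementation or API: `ProviderError`, `pick_weighted`, and `call_with_fallback` are hypothetical names, and each provider is modeled as a plain Python callable.

```python
import random


class ProviderError(Exception):
    """Hypothetical error raised when a provider call fails."""


def pick_weighted(targets, rng=random):
    """Load balancing: pick one (name, weight, call) target in proportion to its weight."""
    weights = [weight for _, weight, _ in targets]
    return rng.choices(targets, weights=weights, k=1)[0]


def call_with_fallback(targets, prompt):
    """Fallback: try each (name, call) target in order and return the first success."""
    errors = []
    for name, call in targets:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append((name, str(exc)))
    raise ProviderError(f"all providers failed: {errors}")
```

A gateway applies this kind of routing on the server side, so the application sends one request and does not need its own retry or routing logic.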

What sets Portkey apart?

Portkey differentiates itself with a unified interface that lets AI developers connect to any language model without changing their codebase. The platform's tracing capability helps teams pinpoint issues by tracking model performance across specific user groups or features. Its semantic caching system cuts costs by up to 50% by storing responses and serving them directly from memory when a similar query arrives.
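The idea behind semantic caching can be sketched in a few lines. This is an illustration under stated assumptions, not Portkey's implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, and `SemanticCache`, `answer`, and the 0.8 similarity threshold are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed cutoff for "similar enough"
        self.entries = []  # list of (vector, response) pairs

    def get(self, query):
        """Return the stored response for the most similar query, or None."""
        vec = embed(query)
        best_score, best_response = 0.0, None
        for stored_vec, response in self.entries:
            score = cosine(vec, stored_vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, query, response):
        self.entries.append((embed(query), response))


def answer(query, cache, call_model):
    """Serve from the cache when possible; otherwise call the model and store the result."""
    cached = cache.get(query)
    if cached is not None:
        return cached
    response = call_model(query)
    cache.put(query, response)
    return response
```

Every cache hit is one model call that is not billed, which is where the savings come from. The threshold trades hit rate against the risk of returning an answer to a question that only looks similar.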

Portkey Use Cases

- Unified LLM integration

- Performance monitoring

- Request load balancing

- Cost optimization

- Prompt management

Features and Benefits

- Connect to over 250 LLM providers through a unified API with fallbacks, load balancing, and caching capabilities.

AI Gateway

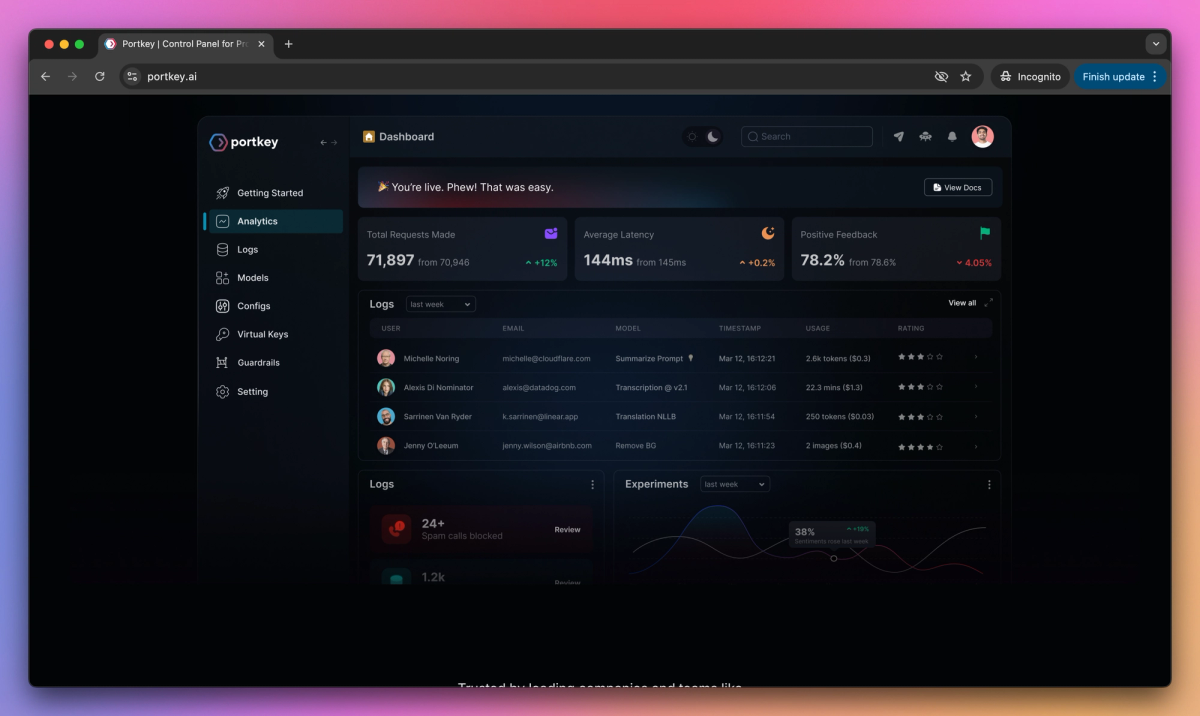

- Monitor costs, quality, and latency with 40+ metrics and detailed logs to debug and optimize AI performance.

Observability Suite

- Build, version, and deploy prompts collaboratively with multi-environment support and access control.

Prompt Management

- Run synchronous checks on LLM behavior to ensure reliable and consistent AI responses.

Guardrails

- Make agent frameworks production-ready with native integrations for Langchain, CrewAI, and Autogen.

Agent Workflows

Portkey Pros and Cons

Pros

- Dedicated support team provides fast and responsive assistance

- Simple integration requiring minimal code changes

- Comprehensive analytics dashboard tracks costs and usage effectively

- Caching system significantly reduces redundant API calls and costs

- Real-time guardrails ensure data safety and reliability

Cons

- Interface occasionally experiences bugs and glitches

- Complex feature set can overwhelm new users

- Pricing structure may be expensive for small organizations

- Documentation needs improvement in some areas

Pricing

10k recorded logs per month

- AI Gateway

- Observability

- Prompt Management

- Simple Caching

- Deterministic Guardrails

- Community Support

100k recorded logs per month

- $9 overage per additional 100k requests

- AI Gateway (Universal API, Fallbacks, Load Balancing, Retries)

- Observability (Logs, Traces, Feedback, Metadata, Filters, Alerts)

- Guardrails (LLM & Partner Guardrails)

- Prompt Management (Unlimited Templates, Playground, API Endpoints, Versioning, Variables)

- Security (Role-Based Access Control, Service Account API Keys)

- Production Support

- Simple & Semantic Caching

10M+ recorded logs per month

- Custom Retention Periods for Logs & Metrics

- Custom Guardrail Hooks, Advanced Evaluation Templates

- Governance (Role-Based Access Control, SSO, Granular Budget & Rate Limits)

- Enterprise Essentials (Private Cloud Deployment, Data Export to Data Lakes, VPC Hosting, Advanced Compliance, Custom BAAs, Data Isolation)

- Dedicated Onboarding & Priority Support