What is LangChain?

LangChain is a composable framework for building context-aware applications with large language models. It connects LLMs to external data sources, manages multi-agent workflows, and implements custom AI reasoning paths to help developers create applications that go beyond simple text generation. Software engineers and AI practitioners use LangChain to build retrieval systems, autonomous agents, and data-integrated chatbots without writing repetitive integration code.

What sets LangChain apart?

LangChain's modular architecture lets developers customize how AI agents interact with both structured and unstructured data sources. Its state management system gives engineers precise control over application behavior, including supervised decision paths in which a human must approve critical actions before they run. Software teams also value the extensible plugin system, which simplifies integrating specialized tools and domain-specific functionality into their AI workflows.
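The supervised decision path described above can be illustrated with a minimal sketch in plain Python. It does not use LangChain's or LangGraph's actual API; `WorkflowState`, `run_with_approval`, and `reviewer` are hypothetical names chosen for illustration, and the approval rule is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorkflowState:
    """Explicit state carried through the workflow (hypothetical structure)."""
    pending_action: str
    critical: bool
    log: list[str] = field(default_factory=list)


def run_with_approval(state: WorkflowState,
                      approve: Callable[[str], bool]) -> WorkflowState:
    """Run the pending action, asking a human first when it is marked critical."""
    if state.critical and not approve(state.pending_action):
        state.log.append(f"rejected: {state.pending_action}")
        return state
    state.log.append(f"executed: {state.pending_action}")
    return state


def reviewer(action: str) -> bool:
    """Stand-in for a human reviewer: approves everything except deletions."""
    return not action.startswith("delete")


safe = run_with_approval(WorkflowState("summarize report", critical=False), reviewer)
risky = run_with_approval(WorkflowState("delete customer records", critical=True), reviewer)
```

In a real application the `approve` callback would block on an actual human decision (for example, a review queue) rather than a hard-coded rule.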

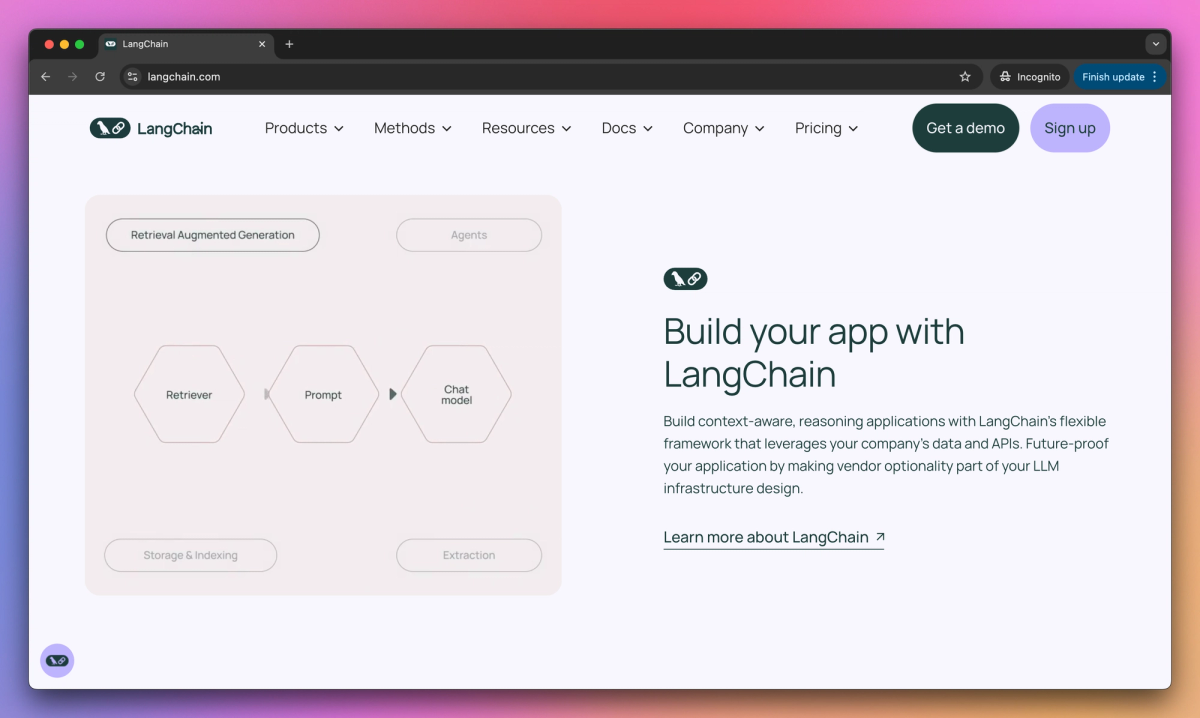

LangChain Use Cases

- Building AI agents

- Document retrieval & RAG

- Contextual conversations

- Output structuring

- LLM application development
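To make the document retrieval and RAG use case concrete, here is a minimal sketch of the retrieve-then-prompt pattern in plain Python. It is not LangChain code: the word-overlap scoring stands in for a real embedding-based retriever, and `tokenize`, `retrieve`, and `build_prompt` are hypothetical helpers.

```python
from __future__ import annotations


def tokenize(text: str) -> set[str]:
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return set(text.lower().split())


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    query_terms = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_terms & tokenize(doc)),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved context and the question into one prompt string."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "Invoices are due within 30 days of issue.",
    "Support is available on weekdays from 9 to 5.",
    "Refunds are processed within 14 days.",
]
question = "when are invoices due"
top = retrieve(question, docs)
prompt = build_prompt(question, top)
```

The resulting `prompt` would then be sent to a language model; that call is omitted here because it depends on the chosen provider.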

Features and Benefits

- Composable Framework: Build context-aware, reasoning applications with a flexible framework that leverages your company's data and APIs.

- Provider Integrations: Connect with numerous AI model providers through standardized interfaces that allow for easy component swapping.

- Agent Orchestration: Create custom agent workflows with LangGraph for controlled execution, human-in-the-loop interactions, and multi-agent collaboration.

- Development Platform: Debug, test, and monitor LLM applications with LangSmith's observability tools for faster development cycles.

- Scalable Deployment: Deploy LLM applications with fault-tolerant scalability using purpose-built infrastructure for agents.
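The ideas of composition and provider swapping listed above can be sketched in plain Python. This is an illustration only and does not use LangChain's classes: `Step`, `make_chain`, and the two fake model functions are hypothetical, and the `|` operator is implemented here from scratch to show pipe-style chaining.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be chained with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # The combined step feeds this step's output into the next one.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


def fake_model_a(prompt: str) -> str:
    """Stand-in for one provider's model call."""
    return f"[model-a] {prompt}"


def fake_model_b(prompt: str) -> str:
    """Stand-in for a different provider behind the same interface."""
    return f"[model-b] {prompt}"


def make_chain(model: Callable[[str], str]) -> Step:
    """Build prompt -> model -> parser; only the model argument changes."""
    prompt = Step(lambda topic: f"Summarize: {topic}")
    parse = Step(lambda text: text.upper())
    return prompt | Step(model) | parse


result_a = make_chain(fake_model_a).invoke("quarterly sales")
result_b = make_chain(fake_model_b).invoke("quarterly sales")
```

Because both fake models accept and return a string, swapping one for the other requires no change to the prompt or parsing steps, which is the point of a standardized interface.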

LangChain Pros and Cons

Pros

- Simplifies complex AI development tasks for users with basic technical knowledge

- Seamlessly integrates with multiple LLM providers and tools

- Clean documentation makes implementation straightforward

- Speeds up development time for AI applications

- Highly composable architecture allows flexible customization

Cons

- Version dependency conflicts cause frequent implementation issues

- New versions sometimes remove previously available features

- Performance can be slower compared to some alternatives

- Function calling capabilities have notable restrictions

Pricing

Plan with 1 seat

- Maximum 1 seat

- Single workspace under Personal Organization

- First 5k base traces free per month

- Monthly, self-serve billing

Plan with up to 10 seats

- Up to 10 seats

- Up to 3 workspaces per Organization

- Enhanced usage limits with 10k free traces per month

- Monthly, self-serve billing

Plan with custom terms

- Advanced administration and security options

- Custom deployment and billing options (annual invoicing)

- Dedicated customer success manager

- Custom pricing terms