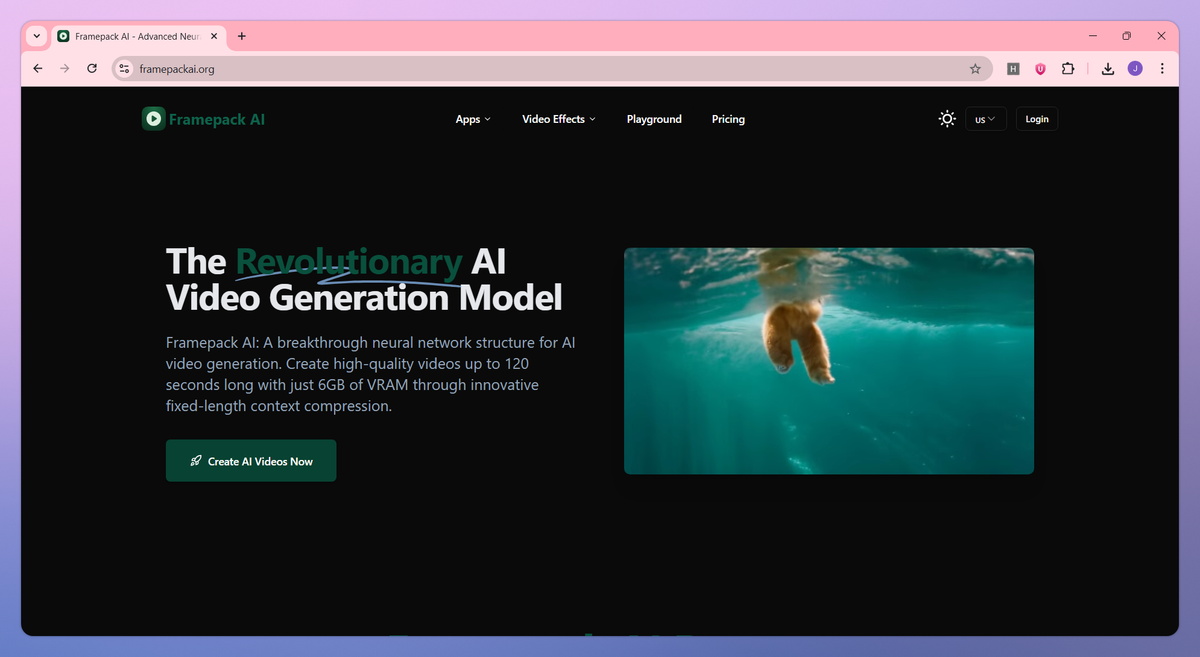

What is Framepack AI?

Framepack AI is a neural network architecture for AI video generation that uses next-frame prediction to create long-form videos. It compresses input frames into fixed-length context 'notes', so it can generate videos of up to 120 seconds at 30fps with as little as 6GB of VRAM. Anti-drift mechanisms keep quality consistent over the length of the video, which lets content creators, filmmakers, and animators produce professional video content without expensive hardware.
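To see why a fixed-length context keeps memory from growing with video length, here is a minimal sketch. It assumes a geometric schedule in which each older frame keeps a fraction of the tokens of the frame after it; the function name, `tokens_per_frame`, and `rate` are hypothetical values chosen for illustration and are not taken from the Framepack codebase.

```python
def context_tokens(num_past_frames: int, tokens_per_frame: int = 1024, rate: int = 2) -> int:
    """Total context size when the i-th most recent frame keeps
    tokens_per_frame // rate**i tokens (hypothetical schedule)."""
    total = 0
    for i in range(num_past_frames):
        kept = tokens_per_frame // (rate ** i)
        if kept == 0:
            # Frames this old contribute nothing further to the context.
            break
        total += kept
    return total


# The context stops growing once older frames are compressed away,
# so it stays below 2 * tokens_per_frame however long the video gets.
for n in (1, 10, 100, 3600):
    print(n, context_tokens(n))
```

Under this assumed schedule the total is a geometric series bounded by `tokens_per_frame * rate / (rate - 1)`, so a 3,600-frame history costs no more context than a 100-frame one.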

What sets Framepack AI apart?

Framepack AI sets itself apart with its open-source architecture and active community ecosystem, which give independent creators and developers direct access to the underlying code and model weights. This benefits small studios and individual developers who want to modify the system for specific projects, integrate it with existing workflows through platforms like ComfyUI, or contribute to ongoing development. Because it remains freely accessible and is developed collaboratively, it suits creators who prefer transparent, community-supported tools over proprietary black-box services.

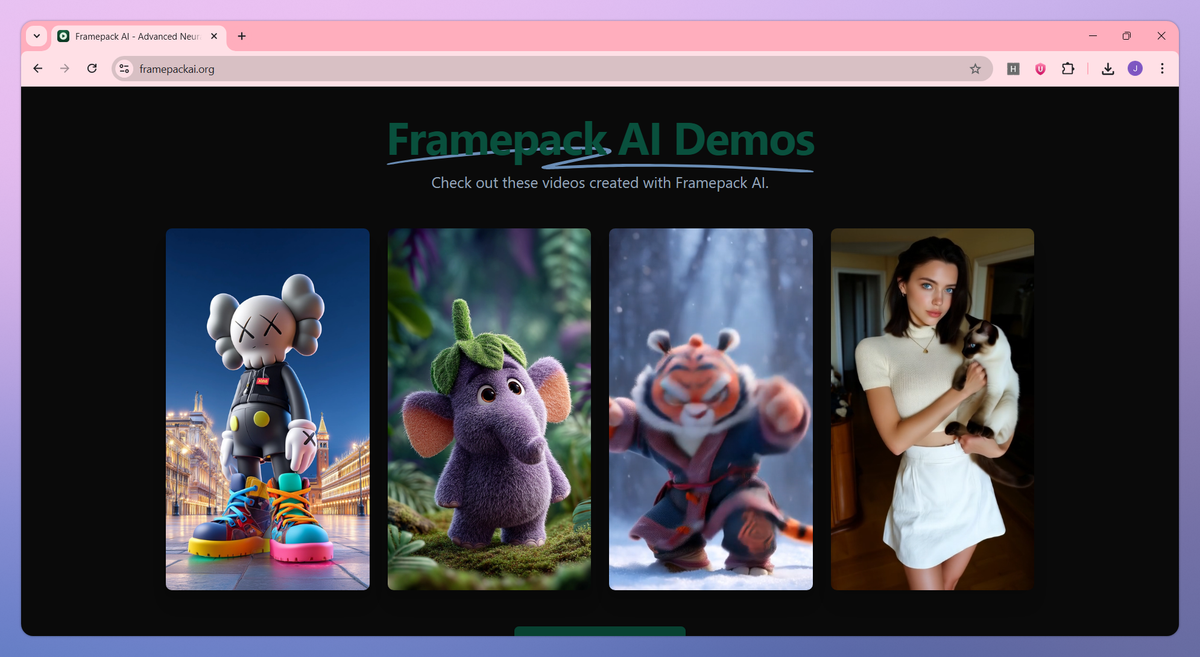

Framepack AI Use Cases

- Image to video conversion

- Long-form video generation

- Animation creation

- Social media content

- Marketing video production

Features and Benefits

- Fixed-Length Context Compression: Creates videos up to 120 seconds long by compressing input frames into fixed-length context 'notes', preventing memory usage from scaling with video length.

- Low Hardware Requirements: Generates high-quality videos with only 6GB of VRAM, compatible with RTX 30XX, 40XX, and 50XX series NVIDIA GPUs.

- Anti-Drift Technology: Maintains consistent quality throughout long videos using progressive compression and differential handling of frames by importance.

- Efficient Generation: Processes frames at approximately 2.5 seconds per frame on RTX 4090 GPUs, with optimization options to further reduce generation time.

- Open-Source Access: Provides free access to the complete codebase and models on GitHub, developed by the creator of ControlNet and a Stanford professor.

- Multiple Attention Mechanisms: Supports various attention technologies including PyTorch attention, xformers, flash-attn, and sage-attention for flexible optimization.
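The figures quoted above (up to 120 seconds at 30fps, roughly 2.5 seconds per frame on an RTX 4090) imply a rough generation time for a full-length clip. The sketch below only does that arithmetic; the function name is hypothetical, and it assumes the per-frame rate stays constant, which may not hold once optimizations or other GPUs are involved.

```python
def estimate_generation_time(duration_s: float, fps: int = 30,
                             seconds_per_frame: float = 2.5) -> tuple[int, float]:
    """Return (frame count, estimated generation time in seconds),
    assuming a constant per-frame cost."""
    frames = round(duration_s * fps)
    return frames, frames * seconds_per_frame


frames, total_s = estimate_generation_time(120)
print(f"{frames} frames, about {total_s / 3600:.1f} hours")
# 3600 frames, about 2.5 hours
```

A 5-second clip under the same assumptions would be 150 frames, or a little over six minutes.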

Pricing

- 500 credits: valid for 1 month, 8 video generations

- 1200 credits (+140%): valid for 1 month, 20 video generations

- 3600 credits (+200%): valid for 3 months, 60 video generations

Every plan includes:

- Access to advanced models

- Private generation

- Prompt optimization tools

- Commercial License

- Priority support
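To compare the tiers, it can help to work out how many credits each video generation consumes. This is a small sketch using only the credit and generation counts listed above; the function name is hypothetical, and it assumes credits are spent evenly across generations, which the listing does not confirm.

```python
# (credits, video generations) for each tier, as listed above.
plans = [(500, 8), (1200, 20), (3600, 60)]


def credits_per_generation(credits: int, generations: int) -> float:
    """Average credits consumed by one video generation."""
    return credits / generations


for credits, generations in plans:
    rate = credits_per_generation(credits, generations)
    print(f"{credits} credits / {generations} generations = {rate:.1f} credits each")
```

On these numbers the smallest tier works out to 62.5 credits per generation and the two larger tiers to 60.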