What is DeepKeep?

DeepKeep is an AI-native security platform that protects the AI applications of large corporations, including those built on generative AI (GenAI) and large language models (LLMs). It safeguards AI throughout its lifecycle, from risk assessment to threat prevention, helping companies build bold AI models while defending against data leakage and toxic responses.

What sets DeepKeep apart?

DeepKeep's platform is designed specifically to safeguard the entire AI lifecycle for corporations adopting advanced AI technologies. This lifecycle-wide coverage lets businesses pursue AI innovation while keeping security measures in place, so they can build bold AI models without compromising on safety.

DeepKeep Use Cases

- AI model risk assessment

- LLM security protection

- Data leakage prevention

- Bias detection in AI

- AI trustworthiness evaluation

Features and Benefits

- Safeguards AI applications throughout their entire lifecycle, from development to deployment, using AI-powered protection against various threats and vulnerabilities.

AI-Native Security

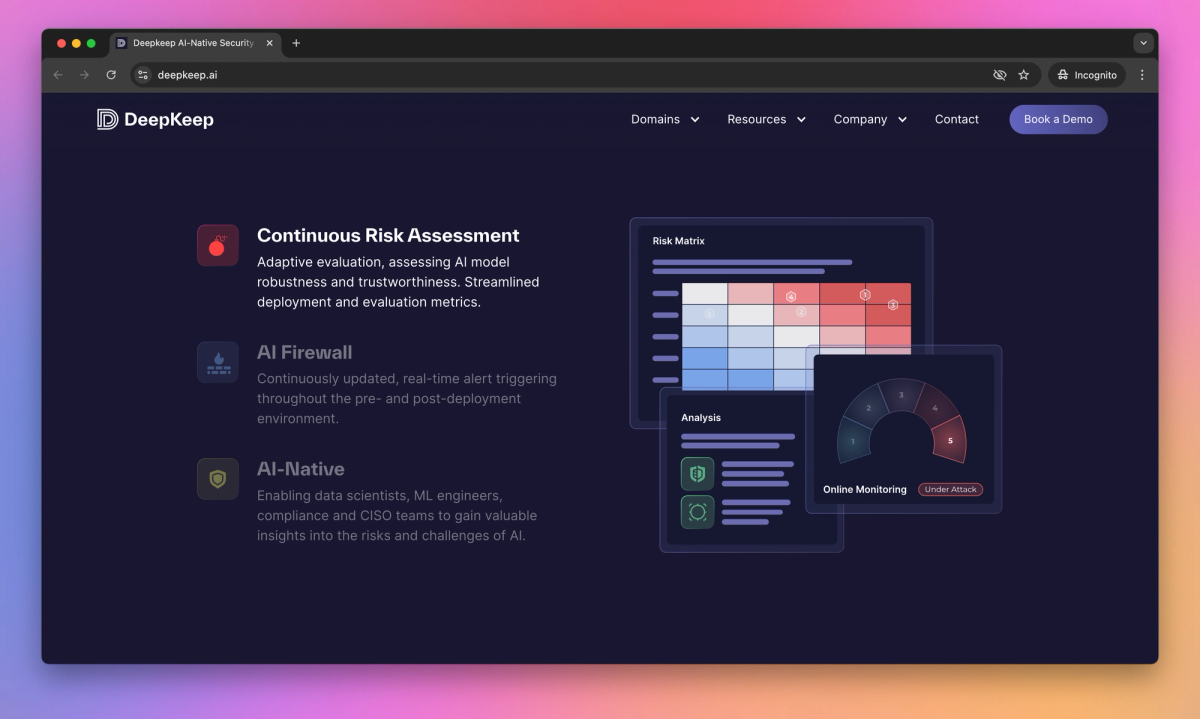

- Evaluates AI model resilience through penetration testing, identifying potential vulnerabilities in security, trustworthiness, and privacy.

Risk Assessment Module

- Provides real-time alerts and protection against attacks on AI applications, covering a wide range of security and safety categories.

Continuous Monitoring

- Secures various AI modalities including Large Language Models (LLMs), computer vision, and tabular data models.

Multi-Modal Protection

- Detects and mitigates issues related to bias, fairness, toxicity, and data leakage to ensure AI models meet ethical and regulatory standards.

Compliance and Ethics Checks

Pricing

- AI-Native Security

- Continuous Risk Assessment

- AI Firewall

- Protection for multimodal AI, including LLM, vision, and tabular data

- Security and trustworthiness for holistic protection

- Real-time detection, protection, and inference