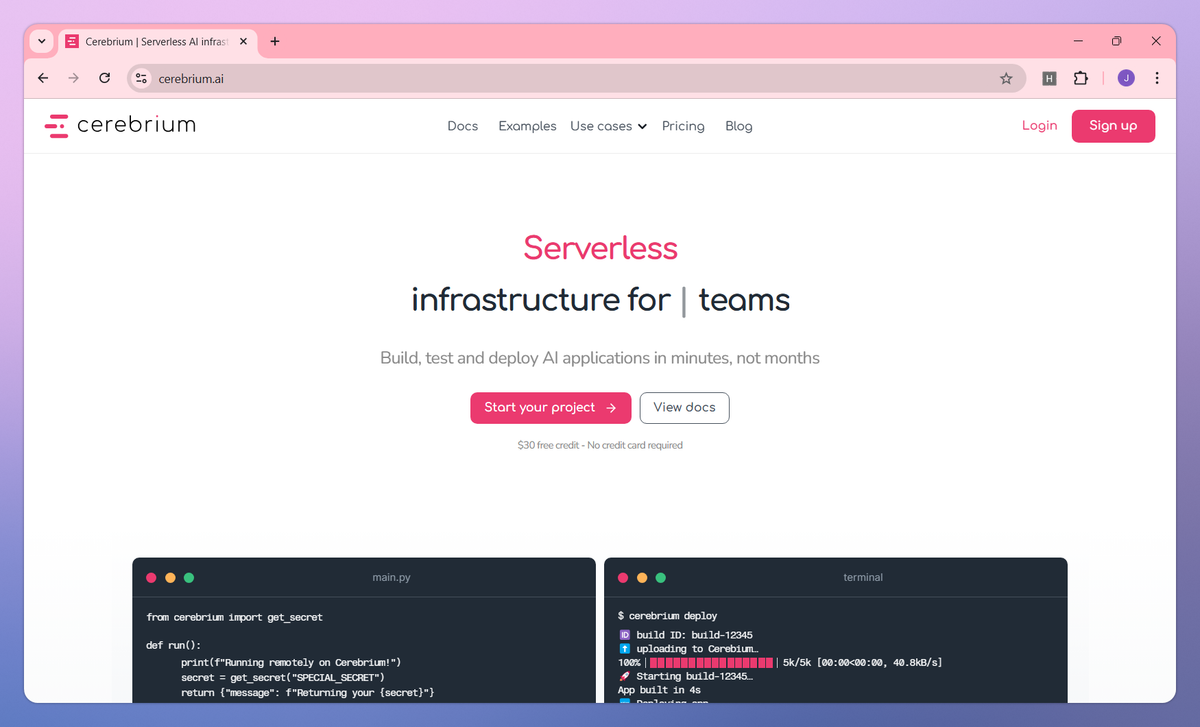

What is Cerebrium?

Cerebrium is a serverless AI infrastructure platform for deploying and scaling machine learning applications. It processes requests in milliseconds, bills per millisecond of compute used, and scales resources automatically with demand, so machine learning engineers and data scientists can deploy models without managing the underlying infrastructure.
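Per-millisecond billing is easiest to see with a little arithmetic. The sketch below is illustrative only: the rate is a made-up placeholder rather than a Cerebrium price, and the function names are hypothetical. It compares the cost of three short requests when billed per millisecond against the same requests rounded up to whole seconds.

```python
from decimal import Decimal

# Hypothetical rate for illustration only; not an actual Cerebrium price.
HYPOTHETICAL_RATE_PER_SECOND = Decimal("0.001")


def cost_per_millisecond(duration_ms: int, rate_per_second: Decimal) -> Decimal:
    """Cost of one request when every millisecond of compute is billed."""
    return rate_per_second / Decimal(1000) * Decimal(duration_ms)


def cost_per_whole_second(duration_ms: int, rate_per_second: Decimal) -> Decimal:
    """Cost of the same request when usage is rounded up to whole seconds."""
    billed_seconds = -(-duration_ms // 1000)  # ceiling division
    return rate_per_second * Decimal(billed_seconds)


# Three short inference requests, measured in milliseconds.
durations_ms = [120, 85, 300]

total_ms_billing = sum(
    (cost_per_millisecond(d, HYPOTHETICAL_RATE_PER_SECOND) for d in durations_ms),
    Decimal(0),
)
total_second_billing = sum(
    (cost_per_whole_second(d, HYPOTHETICAL_RATE_PER_SECOND) for d in durations_ms),
    Decimal(0),
)

print("billed per millisecond:", total_ms_billing)
print("billed per whole second:", total_second_billing)
```

For short requests like these, finer billing granularity means paying for 505 ms of compute rather than three full seconds.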

What sets Cerebrium apart?

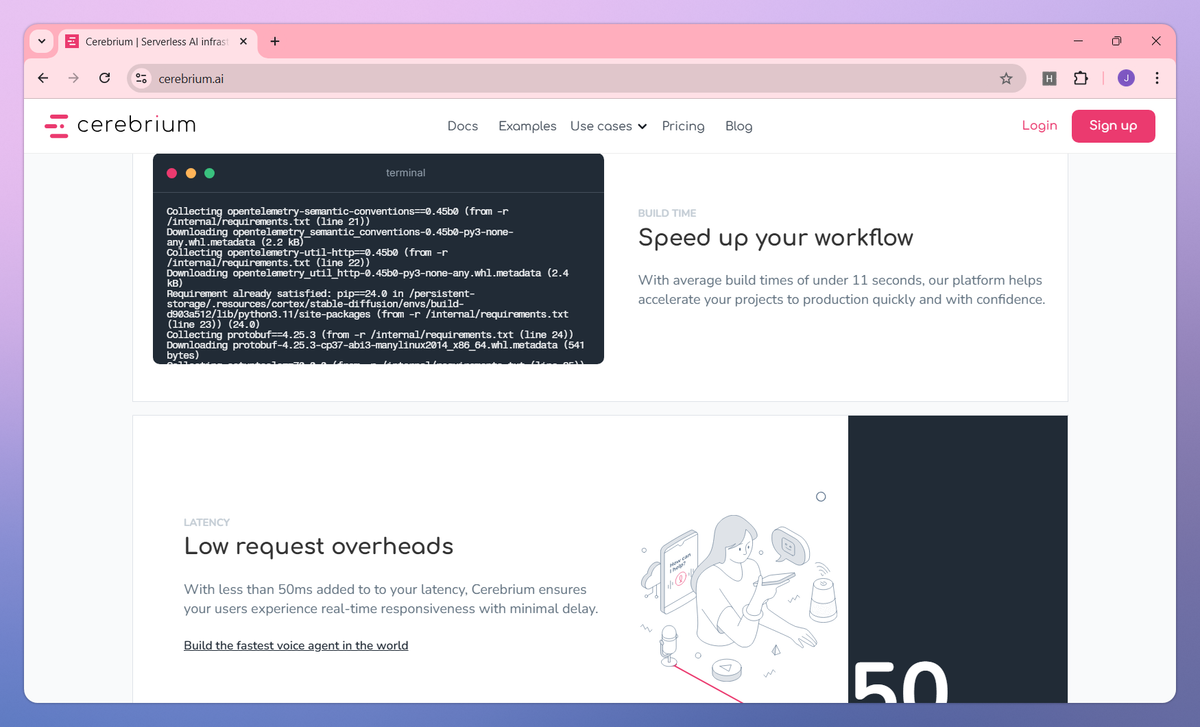

Cerebrium offers a range of GPU hardware, including NVIDIA H100s and A100s, so machine learning engineers can match each workload to suitable compute. Cold starts of under 5 seconds shorten the loop between iterating on a model and serving it in production. Request batching raises GPU throughput, which reduces cost while keeping latency low for mission-critical AI applications.
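The idea behind batching can be sketched in a few lines. This is a generic illustration, not Cerebrium's implementation or API: `run_in_batches` and `fake_model` are hypothetical names, and the "model" simply doubles numbers. The point is that the model is invoked once per batch instead of once per request.

```python
from typing import Callable, List, Sequence


def run_in_batches(
    inputs: Sequence[int],
    model_fn: Callable[[Sequence[int]], List[int]],
    max_batch_size: int,
) -> List[int]:
    """Group inputs into batches and call the model once per batch."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    results: List[int] = []
    for start in range(0, len(inputs), max_batch_size):
        batch = inputs[start:start + max_batch_size]
        results.extend(model_fn(batch))
    return results


model_calls = 0


def fake_model(batch: Sequence[int]) -> List[int]:
    """Stand-in for a GPU forward pass; counts how often it is invoked."""
    global model_calls
    model_calls += 1
    return [x * 2 for x in batch]


# Ten pending requests served with three model calls instead of ten.
outputs = run_in_batches(list(range(10)), fake_model, max_batch_size=4)
print(outputs, "model calls:", model_calls)
```

A larger batch size means fewer model invocations per request, at the price of waiting for a batch to fill, which is the throughput and latency trade-off any batching scheme has to balance.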

Cerebrium Use Cases

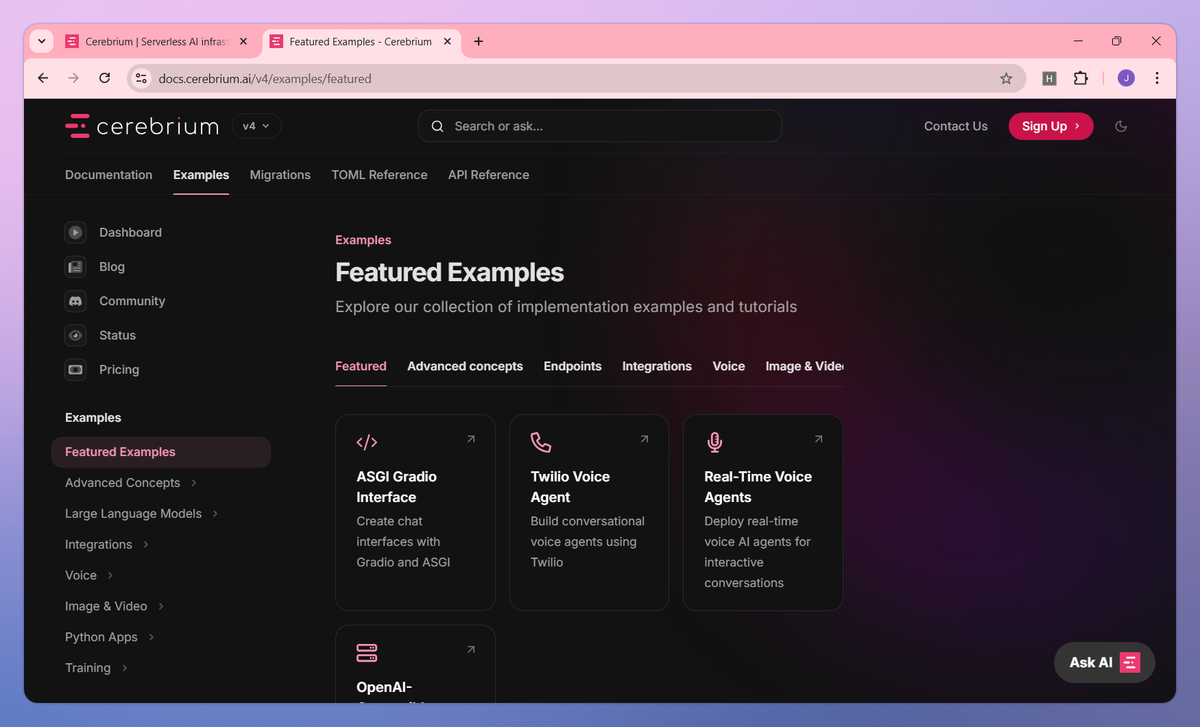

- ML model deployment

- Serverless GPU computing

- Real-time ML inference

- ML infrastructure automation

Who uses Cerebrium?

Features and Benefits

- Blazing-Fast Cold Starts: Deploy machine learning models with cold starts under 5 seconds, so users are not left waiting while a new instance starts.

- Pay-Per-Use Pricing: Pay only for the compute resources used, billed down to the millisecond, with no charges for idle time or unused capacity.

- Advanced GPU Selection: Choose from a range of NVIDIA GPUs, including H100s and A100s, to match hardware to each workload's requirements.

- Automatic Scaling: Scale from 1 to 10,000+ concurrent requests automatically, handling traffic spikes without manual intervention.

- Simple Deployment Process: Deploy machine learning applications with minimal configuration through a CLI and a dashboard interface.

Cerebrium Pros and Cons

Pros

- Makes GPU inference setup significantly faster and easier

- Provides cost savings compared to alternative deployment options

- Has a very helpful and responsive customer support team

- Simplifies the deployment process with serverless infrastructure

Cons

- User interface could be more intuitive

- Lacks advanced customization options for complex deployments

- Documentation could be more comprehensive

- Monitoring and logging features need enhancement

Pricing

Hobby (Free Trial)

- 3 user seats

- Up to 3 deployed apps

- 5 concurrent GPUs

- Slack and Intercom support

- 1-day log retention

Standard

- Everything in the Hobby plan

- 10 user seats

- 10 deployed apps

- 30 concurrent GPUs

- 30-day log retention

Highest tier

- Everything in the Standard plan

- Unlimited deployed apps

- Unlimited concurrent GPUs

- Dedicated Slack support

- Unlimited log retention