What is Patronus AI?

Patronus AI is an automated evaluation platform for large language models that helps companies catch mistakes and hallucinations in AI outputs. It provides performance score predictions for commercial models, fine-tuned LLMs, and pretrained models, allowing businesses to deploy AI applications with greater confidence.

What sets Patronus AI apart?

Patronus AI stands out with its proprietary evaluation models, which go beyond basic error detection. Data scientists and AI engineers can use these models to generate synthetic adversarial datasets that stress-test their LLMs. The approach focuses on continuous improvement, helping teams refine their AI systems over time.

Patronus AI Use Cases

- LLM evaluation

- AI safety testing

- Hallucination detection

- Production AI monitoring

Who uses Patronus AI?

Features and Benefits

- Score model performance based on proprietary criteria to assess AI system quality and reliability.

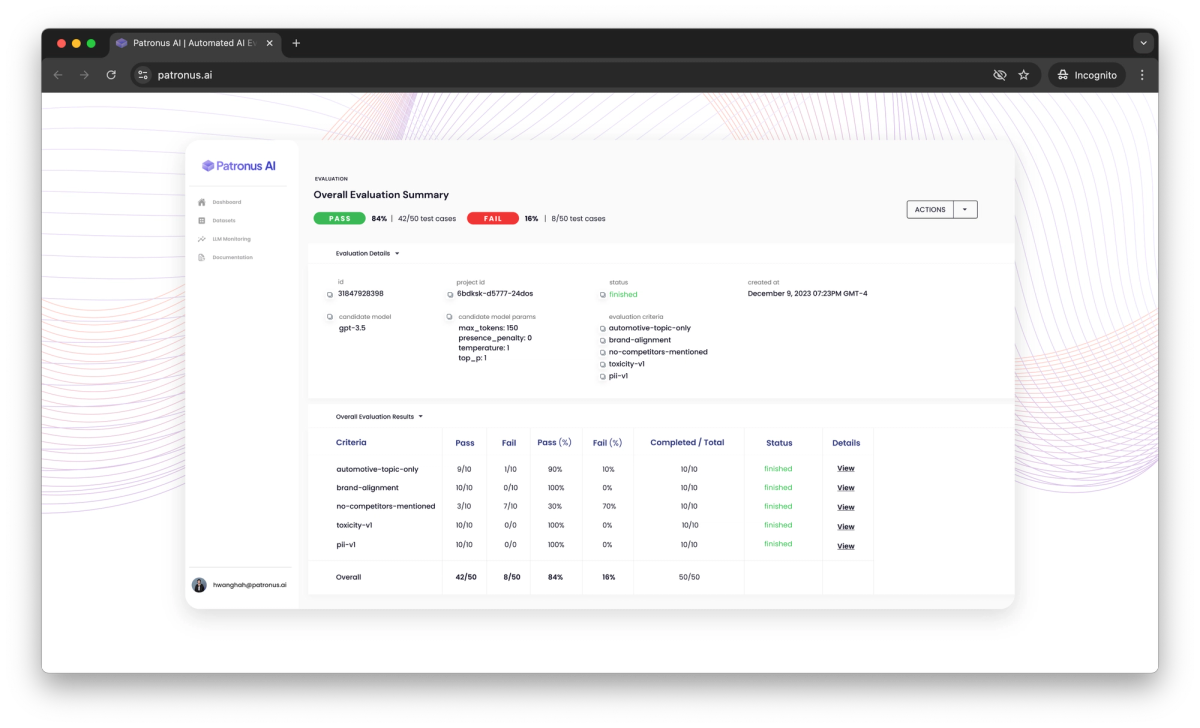

Evaluation Runs

- Continuously evaluate and track LLM performance in production using the Patronus Evaluate API.

LLM Failure Monitoring

- Auto-generate novel adversarial testing sets to identify edge cases where models fail.

Test Suite Generation

- Verify AI models deliver consistent, dependable information with RAG and retrieval testing workflows.

Retrieval-Augmented Generation Analysis

- Compare models side-by-side to understand performance differences in real-world scenarios.

Benchmarking

Pricing

Enterprise: price not available (custom pricing)

A "Book a Call" option is available.