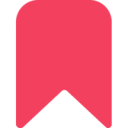

Promptmetheus — AI Prompt Engineering Tool

What is Promptmetheus?

Promptmetheus is a prompt engineering tool that helps AI developers compose, test, and optimize prompts across multiple language models. It offers features like modular prompt design and cross-platform testing, allowing developers to create more effective prompts for tasks such as extracting emotions from journal entries.

What sets Promptmetheus apart?

Promptmetheus sets itself apart with its AIPI endpoints, which let AI developers deploy tested prompts directly into applications without manual integration. This is particularly useful for teams building chatbots or virtual assistants, because it simplifies updating and managing prompts in production. Promptmetheus also offers real-time collaboration tools, so prompt engineering teams can work together on complex projects and iterate faster.

Promptmetheus Use Cases

- Compose prompts

- Test prompts

- Optimize prompts

- Deploy prompts

Features and Benefits

- Prompt Composability: Break prompts into modular blocks for easy composition and experimentation.

- Multi-Platform Support: Test prompts across various LLM providers and models from a single interface.

- Performance Analytics: View prompt performance statistics and insights to optimize results.

- Real-Time Collaboration: Work together with team members on shared prompt projects in real time.

- AIPI Deployment: Deploy tested prompts directly to dedicated AIPI endpoints for integration into applications and workflows.
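
To make the ideas of modular blocks and testing across models more concrete, here is a minimal Python sketch. It does not use any Promptmetheus API: the names `BLOCKS`, `compose_variants`, `run_matrix`, and the two stub models are hypothetical and exist only for illustration. The stubs are plain functions standing in for real LLM provider clients.

```python
from itertools import product
from typing import Callable, Dict, List

# Hypothetical prompt blocks: each section has one or more interchangeable variants.
BLOCKS: Dict[str, List[str]] = {
    "context": [
        "You are an assistant that analyzes journal entries.",
        "You are a careful reader of personal diaries.",
    ],
    "task": ["List the emotions expressed in the entry below."],
    "format": ["Answer as a comma-separated list.", "Answer as a JSON array."],
}


def compose_variants(blocks: Dict[str, List[str]]) -> List[str]:
    """Build every prompt that picks one variant per section, in section order."""
    return ["\n".join(choice) for choice in product(*blocks.values())]


def run_matrix(
    prompts: List[str],
    models: Dict[str, Callable[[str], str]],
    entry: str,
) -> List[dict]:
    """Run every prompt variant against every model and collect one row per pair."""
    rows = []
    for model_name, complete in models.items():
        for index, prompt in enumerate(prompts):
            output = complete(f"{prompt}\n\n{entry}")
            rows.append({"model": model_name, "variant": index, "output": output})
    return rows


# Stand-in "models" (assumptions): they only report the input size.
def stub_model_a(prompt: str) -> str:
    return f"[model-a] received {len(prompt)} characters"


def stub_model_b(prompt: str) -> str:
    return f"[model-b] received {len(prompt.split())} words"


MODELS: Dict[str, Callable[[str], str]] = {
    "model-a": stub_model_a,
    "model-b": stub_model_b,
}

if __name__ == "__main__":
    variants = compose_variants(BLOCKS)
    for row in run_matrix(variants, MODELS, "Today I felt calm but a little lonely."):
        print(row["model"], row["variant"], row["output"])
```

Swapping a single variant in one section changes every composed prompt that uses it, which is the practical appeal of working with blocks rather than one monolithic prompt string.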

Promptmetheus Pros and Cons

Pros

- Allows easy toggling between different LLM providers

- Provides an intuitive interface for prompt engineering

- Offers real-time collaboration features

- Enables quick brainstorming and fine-tuning of prompts

- Supports multiple language models

- Includes advanced debugging capabilities

- Facilitates easy integration with other platforms

Cons

- Limited availability of LLM models compared to standalone services

- Steep learning curve for new users

- Confusing subscription model

- Lack of direct control over some LLM parameters

- User interface can be overwhelming for beginners

Pricing

Forge (Free Trial)

- Local data storage

- OpenAI LLMs

- Stats & Insights

- Data import / export

- Community support

Archery (Single)

- Cloud sync

- All APIs and LLMs

- Stats & Insights

- Projects

- History and full traceability

- Data export

- Standard support

Archery (Team)

- All Single features

- Shared projects

- Shared prompt library

- Real-time collaboration

- Business support

Archery

- All Team features

- Deploy prompts to AIPI endpoints

- AIPI versioning and monitoring

- Premium support