What is Pinecone?

Pinecone is a vector database that powers AI applications with semantic search and knowledge retrieval capabilities. It stores vector embeddings from language models, performs similarity searches at scale, and integrates with frameworks like LangChain to help developers and data scientists build applications that understand meaning beyond exact keyword matches. With Pinecone, machine learning engineers can create more accurate AI chatbots, knowledge bases, and search systems.
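To make the idea of similarity search over embeddings concrete, here is a minimal sketch in plain Python. It does not use Pinecone's client or reflect how Pinecone is implemented. The function names `cosine_similarity` and `top_k`, the document IDs, and the three-dimensional vectors are all hypothetical, and the brute-force scan is only workable for toy data; a production vector database would be expected to use specialized indexing instead.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as having no similarity
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real embeddings come from a language model
# and typically have hundreds or thousands of dimensions.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "return-an-item": [0.8, 0.2, 0.1],
}

query = [1.0, 0.0, 0.0]  # stand-in for the embedding of a user question
results = top_k(query, index, k=2)
for doc_id, score in results:
    print(doc_id, round(score, 3))
```

The two refund-related documents rank highest because their vectors point in nearly the same direction as the query, even though no keywords are compared. That is the sense in which the search matches meaning instead of exact terms.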

What sets Pinecone apart?

Pinecone distinguishes itself with a serverless architecture that separates storage, reads, and writes to manage scaling without operational overhead. This distributed approach helps data scientists and ML engineers maintain sub-second query speeds even when working with billions of vectors across multiple data centers. Pinecone supports multi-cloud deployment across AWS, GCP, and Azure, giving organizations flexibility to align with existing infrastructure while maintaining consistent performance.

Pinecone Use Cases

- Vector similarity search

- RAG knowledge bases

- Recommendation systems

- Semantic document retrieval

- AI chatbot memory

Features and Benefits

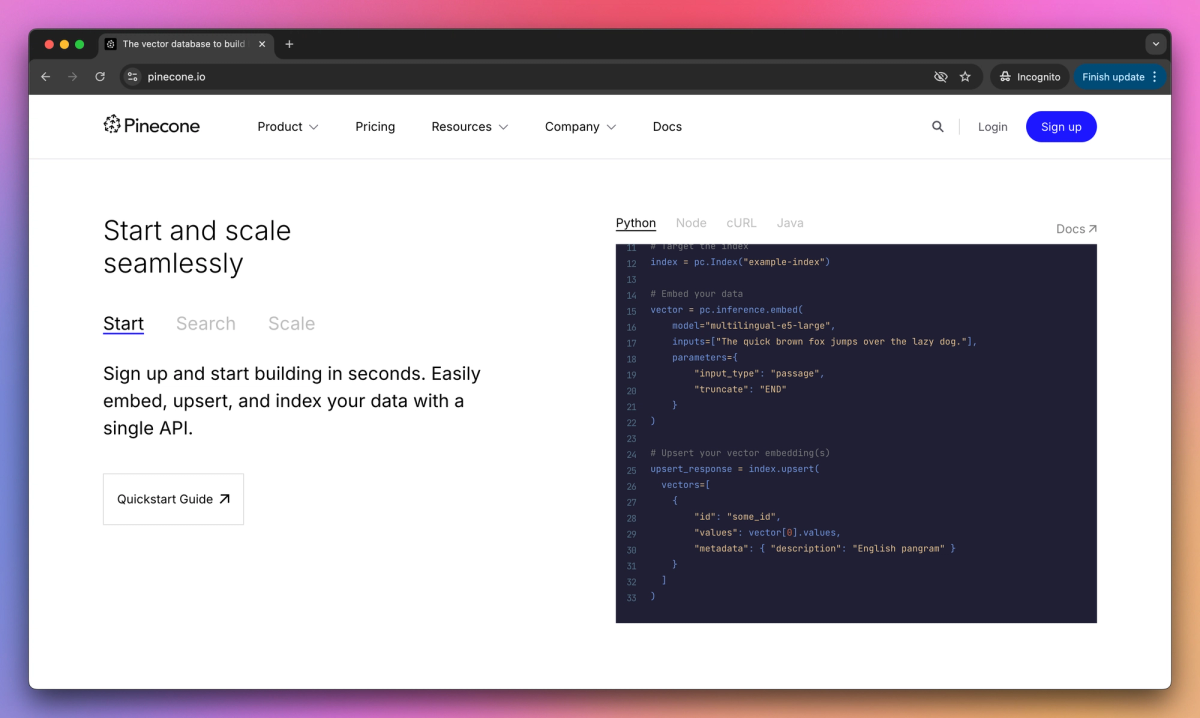

- Vector Database: Stores and queries vector embeddings to enable semantic search and retrieval-augmented generation for AI applications.

- Serverless Architecture: Automatically scales resources based on usage patterns without requiring infrastructure management.

- AI Model Integration: Works with embeddings from any model or provider, including hosted options through the Inference API.

- Hybrid Search: Combines vector similarity with metadata filtering to deliver more relevant search results.

- Enterprise Security: Provides data encryption, access controls, and compliance certifications for secure AI deployments.
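The combination of vector similarity with metadata filtering mentioned in the feature list can be illustrated with a short, self-contained sketch. This is an assumption-laden toy, not Pinecone's API or algorithm: the `filtered_search` helper, the record layout (`id`, `values`, `metadata`), and the exact-match filter semantics are hypothetical choices made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(query, records, metadata_filter, k=3):
    """Keep records whose metadata matches every filter key, then rank by similarity.

    Hypothetical helper: the filter only supports exact equality on each key.
    """
    candidates = [
        r for r in records
        if all(r["metadata"].get(key) == value for key, value in metadata_filter.items())
    ]
    ranked = sorted(candidates, key=lambda r: cosine(query, r["values"]), reverse=True)
    return [r["id"] for r in ranked[:k]]


# Hypothetical records with two-dimensional toy vectors.
records = [
    {"id": "a", "values": [1.0, 0.0], "metadata": {"lang": "en"}},
    {"id": "b", "values": [0.9, 0.1], "metadata": {"lang": "de"}},
    {"id": "c", "values": [0.0, 1.0], "metadata": {"lang": "en"}},
]

hits = filtered_search([1.0, 0.0], records, {"lang": "en"}, k=2)
print(hits)
```

Record `b` is nearly as close to the query as `a`, but it is excluded because its metadata does not match the filter. Filtering before ranking, as done here, is one possible design; it keeps irrelevant records from taking up slots in the top-k results.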

Pinecone Pros and Cons

Pros

- Extremely fast query and indexing performance even at large scale

- Simple API integration and quick setup process

- Highly reliable with minimal downtime

- Serverless option offers cost-effective scaling

- Excellent documentation and customer support

Cons

- Expensive pricing for larger-scale production usage

- Limited datacenter regions and hosting options

- Lacks some advanced security features like MFA

- Challenging metadata management and schema changes

- No self-hosted or on-premises deployment option

Pricing

Free Trial

- Serverless indexes

- Inference

- Assistant

- Console Metrics

- Community Support

Standard

- Unlimited Serverless usage

- Unlimited Inference and Assistant usage

- Choose your cloud and region

- Import from object storage

- Multiple projects and users

- RBAC

- Backups

- Prometheus metrics

- Free support

- Response SLAs available via Developer/Pro support add-on

Enterprise

- Everything in Standard

- 99.95% Uptime SLA

- Single sign-on

- Private Link

- Customer Managed Encryption Keys

- Audit Logs

- Pro support included